Catching up: Sparkle's Roadmap and Life's Lemons


Hey there, fam! It’s been a wild ride these past few months, but I’m back in the saddle and ready to jam on Sparkle. This update is long overdue.

First, let’s address the elephant in the room: my disappearance. Life decided to pelt us with lemons galore, and I’ve been busy squeezing every last drop into lemonade. My wife’s health took a nosedive, and for the past three months she’s been battling one illness after another, culminating in her second bout of COVID. Amid the chaos of juggling a day job, freelance gigs, our furry family (five dogs and four cats, no less), and our 10-year-old son’s summer shenanigans, I had to put family first.

I’ve finally carved out some much-needed breathing room now that my wife’s on the mend. And let me tell you, I’m more inspired than ever to dive back into Sparkle.

Sparkle Update

So, where do we stand? Well, I’ve been more or less rewriting the codebase from the ground up. The initial version of Sparkle was a proof-of-concept prototype without proper error handling or tests. Now I’m hardening things up and getting it shipshape for its debut on GitHub.

Before that happens, though, there are a couple of key features I’m laser-focused on nailing down. First, I want to ensure a seamless experience with Sparkle Server, which lets you attach your agents to an OpenAI-compatible chat endpoint and start chatting with them in just a few lines of code. Second, I’m reimagining function calling and tool use, aiming for a more intuitive API than the current setup.

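Registering an agent with Sparkle Server looks something like this:

```php
// Register an agent with Sparkle Server under the name 'nova-sonnet'
SparkleServer::register(
    'nova-sonnet',
    // Factory closure that builds the agent
    fn () => Agent::create(
        // Back the agent with Anthropic's Claude 3.5 Sonnet model
        llm: Anthropic::create(
            AnthropicModel::Sonnet35,
        ),
        // Load the character prompt from a Laravel view
        prompt: view('prompts.characters.nova-v2'),
    )
);
```

Once those pieces are in place, along with comprehensive documentation, I’ll be pushing Sparkle to GitHub for an alpha/beta release. You’ll finally get to kick the tires and take this baby for a spin! From there, it’s full steam ahead towards the coveted 1.0 release.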

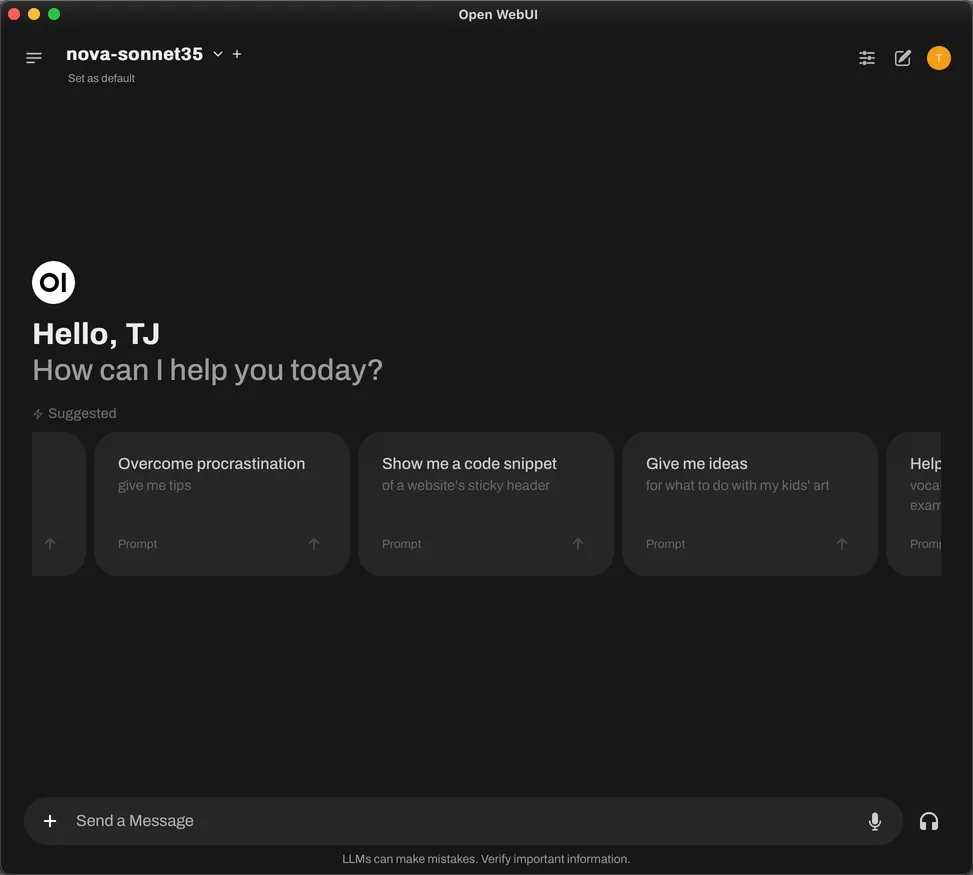

For 1.0, I’m doubling down on chat through Sparkle Server, adding support for images, function calling, and tool use. I’m also building a tight integration between Sparkle and the amazing OpenWebUI project so we can capitalize on all of its awesome features. Tracing is another crucial piece I’m tackling, since it’s an invaluable debugging tool when working with LLMs.

But that’s not all! I want Sparkle to play nice with the big three: OpenAI, Anthropic, and Ollama. I’ll eventually support other providers and models, but these are my daily drivers, so I’m committed to making sure every feature is firing on all cylinders across all three.

Post-1.0, I’ll be heads-down, cranking out a slew of drop-in tools for you all to sink your teeth into, complete with tons of examples and top-notch documentation. Audio support (hello, Whisper!), embeddings, document splitting, and storage for chat-with-your-docs workflows: you name it, I’m cooking it up.

So there you have it: the lowdown on my odyssey and Sparkle’s roadmap. I’m aiming to have something up on GitHub within the next month or so, life’s curveballs permitting. After that, it’s on to 1.0!

I can’t thank you all enough for your excitement and support throughout this journey. Knowing you’re eager to get your hands on Sparkle has been a driving force, and I’m thrilled to finally share what I’ve been brewing. Stay tuned, and get ready to sparkle!